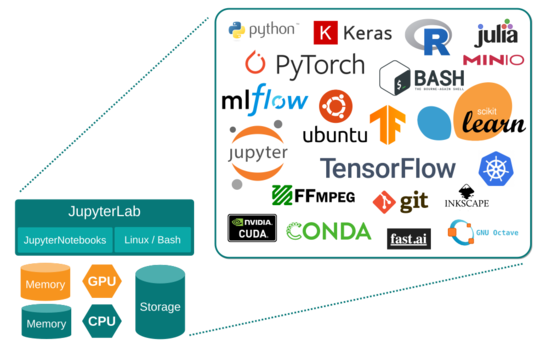

GPULab provides fully configured JupyterLab environments built to work seamlessly with their attached NVIDIA GPUs and CUDA APIs. GPULab environments come pre-installed with the most popular Data Science, Machine Learning, and Deep Learning libraries, including TensorFlow, PyTorch, Keras, Scikit-learn, and fastai, along with all of their dependencies (see GPULab jl3-v2.1.x for details). Users may add libraries to support unique projects, as well as custom libraries shared across an organization. Because the environment is so heavily provisioned, however, most users will find everything they need to start a project immediately. This holistic approach removes the need to settle on a language, library, or framework before beginning a project: a single GPULab project can easily combine statistical code in R with ML experimentation in PyTorch, or any other combination.
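A quick way to confirm what a given environment actually provides is to check, from a notebook cell, which of the libraries named above can be imported and whether PyTorch can see a CUDA device. The sketch below is a minimal illustration, not a GPULab tool. It assumes only the standard import names of these packages (`tensorflow`, `torch`, `keras`, `sklearn`, `fastai`). The helper names `installed_libraries` and `torch_sees_gpu` are hypothetical.

```python
import importlib.util

# Import names for the libraries listed in the text (scikit-learn imports as "sklearn").
LIBRARIES = {
    "TensorFlow": "tensorflow",
    "PyTorch": "torch",
    "Keras": "keras",
    "Scikit-learn": "sklearn",
    "fastai": "fastai",
}


def installed_libraries(libraries=LIBRARIES):
    """Hypothetical helper: map each display name to True if its module can be found."""
    # find_spec locates a top-level module without importing it, so this check is cheap.
    return {name: importlib.util.find_spec(module) is not None
            for name, module in libraries.items()}


def torch_sees_gpu():
    """Hypothetical helper: True if PyTorch is installed and reports a usable CUDA device."""
    if importlib.util.find_spec("torch") is None:
        return False
    import torch  # imported lazily so the check also works where PyTorch is absent
    return torch.cuda.is_available()


if __name__ == "__main__":
    for name, present in installed_libraries().items():
        print(f"{name:<13} {'installed' if present else 'missing'}")
    print("PyTorch CUDA device available:", torch_sees_gpu())
```

`find_spec` is used instead of a plain `import` so that the inventory stays fast and does not load large frameworks just to report their presence. Libraries that turn out to be missing can typically be added from a cell with IPython's `%pip install` magic. Whether such installs persist across sessions depends on how the environment is configured.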

GPULab builds on the official NVIDIA CUDA container, an Ubuntu-based distribution that provides the CUDA Toolkit: everything needed to develop GPU-accelerated applications. GPULab extends NVIDIA’s container with JupyterLab, numerous language runtimes and compilers, and dozens of Linux applications for data manipulation, data transfer, and communication with external systems.
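Since the environment ships the CUDA Toolkit, its release can be read from the toolkit's compiler driver, `nvcc`. The sketch below assumes that `nvcc` is on `PATH`. In NVIDIA's own images it is included in the development ("devel") variants and may live under `/usr/local/cuda/bin`. It also assumes the usual output line of the form `Cuda compilation tools, release 12.2, V12.2.140`. The function names are hypothetical.

```python
import re
import shutil
import subprocess

# nvcc --version normally prints a line like "Cuda compilation tools, release 12.2, V12.2.140".
_RELEASE_RE = re.compile(r"release (\d+)\.(\d+)")


def parse_nvcc_release(output):
    """Hypothetical helper: extract (major, minor) from nvcc output, or None if absent."""
    match = _RELEASE_RE.search(output)
    return (int(match.group(1)), int(match.group(2))) if match else None


def cuda_toolkit_version():
    """Hypothetical helper: run `nvcc --version` if nvcc is on PATH; return (major, minor) or None."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None  # toolkit compiler not found on PATH
    result = subprocess.run([nvcc, "--version"], capture_output=True, text=True, check=False)
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    print("CUDA Toolkit release:", cuda_toolkit_version())
```

Parsing is kept separate from the subprocess call so the parsing logic can be checked without a GPU or toolkit installed.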

GPULab environments run in process-isolated containers managed by a Kubernetes cluster, which provides high availability, encrypted communication, secure network policies, and private persistent data volumes.
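From inside a notebook, one rough way to confirm that the session is running in a Kubernetes-managed container is to look for the signals Kubernetes normally leaves in a pod. These are the `KUBERNETES_SERVICE_HOST` environment variable and the default service-account mount at `/var/run/secrets/kubernetes.io/serviceaccount`. The mount is absent when token automounting is disabled. The sketch below is a heuristic under those assumptions, not a GPULab API. The function name is hypothetical.

```python
import os

# Kubernetes sets this variable in containers so workloads can locate the API server.
_K8S_ENV_VAR = "KUBERNETES_SERVICE_HOST"
# Default mount point of the pod's service-account credentials (may be disabled per pod).
_SA_DIR = "/var/run/secrets/kubernetes.io/serviceaccount"


def running_in_kubernetes(environ=None, sa_dir=_SA_DIR):
    """Hypothetical heuristic: True if either Kubernetes pod signal is present."""
    environ = os.environ if environ is None else environ
    return _K8S_ENV_VAR in environ or os.path.isdir(sa_dir)


if __name__ == "__main__":
    print("Running inside a Kubernetes pod:", running_in_kubernetes())
```

Checking two independent signals makes the result less sensitive to a single pod setting, but a `False` result is still only a strong hint, not proof.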