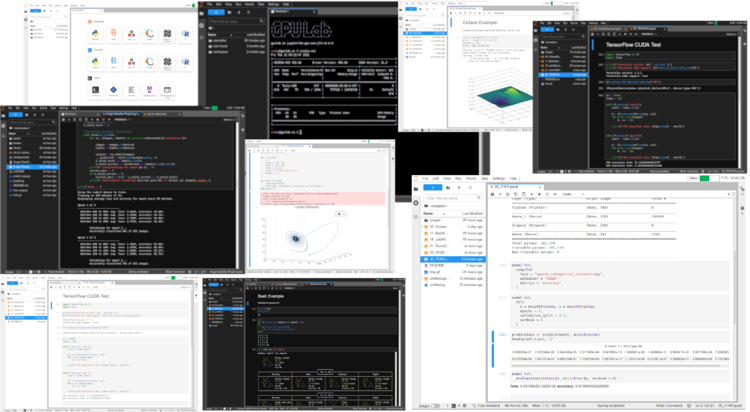

UNLIMITED GPU

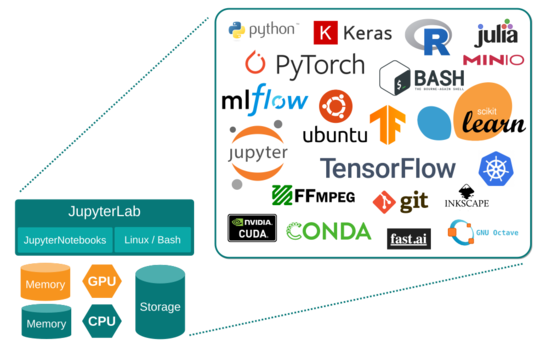

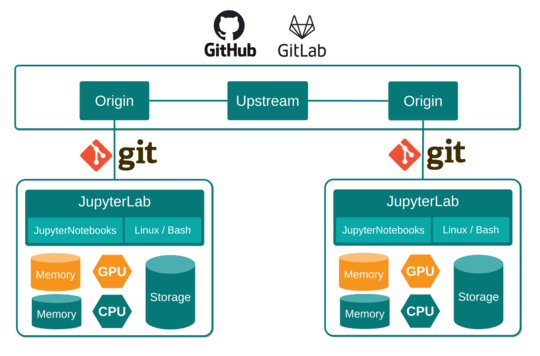

GPULab is a turnkey JupyterLab notebook environment running on a feature-packed Ubuntu Linux operating system. GPULab gives data scientists and research teams a dedicated NVIDIA K80 GPU for a flat fee.

Stop stressing over the high cost of the hourly GPU billing offered by other deep learning providers, and harness the power of UNLIMITED GPU compute for all of your projects with one of our generous daily, weekly, or monthly subscriptions.